With Google’s Pixel event coming to a close, we now have a comprehensive look at the AI upgrades coming to the Pixel 9 devices, as well as a fair understanding of Apple’s AI plans (branded Apple Intelligence) for its upcoming iPhone 16 series. Although these features haven’t launched on either platform yet (both Google and Apple plan to roll out their multimodal AI assistants later this year), it’s worth taking a quick look at how they stack up against each other, and whether it makes sense to go the Pixel or the iPhone route this smartphone season.

On-Device Models

Both Google and Apple boast the ability to handle AI queries on the device itself, meaning the AI processing happens on your phone instead of on a cloud server. On Pixel phones, this is courtesy of Gemini Nano, Google’s on-device multimodal AI model, while Apple simply calls its system Apple Intelligence instead of bogging you down with model and version names. When a task can’t be handled on-device, both Gemini and Apple Intelligence fall back to the cloud. However, everything Gemini does is handled by Google’s own AI models, whereas Apple outsources some tasks to ChatGPT, without logging any private data… but more on privacy later. Google benefits from owning the entire ‘stack’, whereas Apple gains flexibility by leaning on ChatGPT’s capabilities where its own models fall short.

Multimodality

Multimodality refers to the ability to work across different modes of input, such as text, images, video, and audio. Both Gemini and Apple Intelligence are designed to be multimodal: they accept text input, take voice commands, analyze audio files, inspect images, and can even search within videos. It’s worth noting that while Google and Apple have both announced these multimodal capabilities, the final AI assistants are still weeks or months away from launch.

Language Input

Apple Intelligence has only been demonstrated to work in English as of this writing. Google’s Gemini, however, holds the edge by accepting 45+ different languages as input.

The Gemini ‘Feature Quilt’

Text Generation/Rewrite

This might be the simplest yet most useful feature on both platforms, and the one you’ll likely use the most. Text generation and rewriting isn’t new for Gemini at all, and it’s available across Google’s own apps and services regardless of your device. You can prompt Gemini to compose emails, write letters or flyers, and even proofread your documents, either on the Gemini website or within apps like Gmail and Docs. Apple Intelligence offers the same features (refining, proofreading, rewriting), but these capabilities appear to be limited to Apple’s own hardware: iPhone, iPad, and Mac. So while Apple Intelligence’s writing tools have yet to debut, Google’s Gemini-powered equivalents are already available on devices of any make.

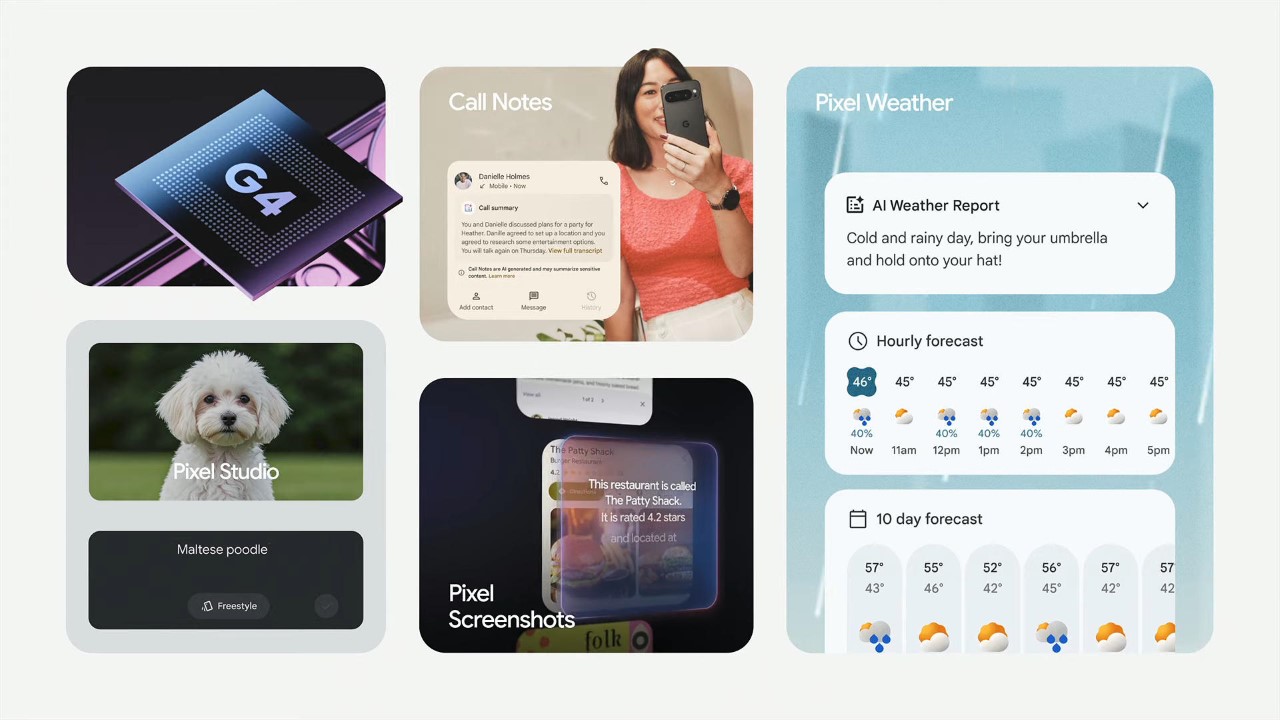

Image Generation

Beyond analyzing images, Gemini and Apple Intelligence can also generate them, each with its own set of abilities. Google unveiled its Pixel Studio text-to-image app today, exclusive to Pixel 9 models. Type out a prompt and the app creates an image; you can then browse variants, refine the result by tweaking the prompt, or change its look by choosing from a selection of style templates. At WWDC this year, Apple unveiled Image Playground, which offers very similar capabilities. However, Image Playground has fewer styles to choose from and deliberately steers clear of generating realistic images. Google’s Pixel Studio can generate photorealistic images, although the company has also introduced tools for detecting AI-generated images, which may play a crucial role in limiting the spread of deepfakes and misinformation.

Additionally, Google’s Magic Editor (its upgrade to Magic Eraser) is set to debut on Pixel phones and also see a broader Android (and even iOS) rollout. The feature lets you fix a photo by reframing or expanding the composition, or edit parts of it by adding generative AI details. Google, however, has no answer to Apple’s Genmoji, which generates custom emoji based on prompts and context. These custom emoji can be shared in Apple’s Messages app, and can also be saved as stickers for use in other messaging apps like WhatsApp and Instagram.

Live Mode

Debuting at Google’s Pixel launch event today, Gemini’s Live Mode lets you talk to the AI the way you would talk to another person. If you remember ChatGPT’s GPT-4o model from not too long ago, Live Mode offers a similar experience. You can summon the AI and simply have a conversation with it: ask it questions, bounce ideas off it, or collaborate on them. The feature is currently available only in English and only to Gemini Advanced subscribers, which means free users can’t access Live Mode. Apple Intelligence doesn’t have a Live Mode as of now, though you could expect one to arrive once its features actually begin rolling out. Notably, ChatGPT lets you chat with the GPT-4o model for free, but only a ‘few’ times within a limited time window.

Call Record/Transcribe

Both Gemini and Apple Intelligence can record and transcribe phone calls. Recorded calls are transcribed using on-device AI, and all participants are notified that the call is being recorded. The difference lies in where the transcripts end up: Apple adds them to the Notes app, while Google lets you view them right within the call log using a feature called Call Notes.

The Apple Intelligence ‘Feature Quilt’

Memory + Context

What good is an AI if it doesn’t remember your conversations? Both Gemini and Apple Intelligence supposedly handle context very well, understanding what you need, which apps to reference, and even the people in your contacts. You can hold a steady conversation with both AI models, and they’ll keep track of what you’re talking about without constantly needing to be reminded. This is a major change from just a few years ago, when voice assistants had a limited memory that lasted only for a single command. Now you can reference something from a photo taken years ago, or details from an email buried in your inbox, and the AI models will get to work without missing a beat. Or at least, that’s what Apple and Google would have us believe. We’re still waiting for these features to roll out on devices, and we’ll only understand their limitations once they do.

Privacy

A lot of AI talk is also accompanied by a fair amount of fearmongering. AI replacing humans, AI training itself on your data, and AI going rogue are all valid concerns that Google and Apple understand rather well. To that end, many of the features powered by Gemini Nano on the Pixel 9 and by Apple Intelligence run on-device, without even connecting to the internet (the on-device Gemini Nano is different from the Gemini available on other Android devices). When Gemini or Apple Intelligence does need a cloud-hosted version of its model, both companies say the processing happens within a sandbox, with no data made accessible to third parties. Notably, Apple also relies on ChatGPT to power some of its AI experiences, although Craig Federighi was quick to point out that ChatGPT doesn’t (or cannot) log any data or queries entered through Apple Intelligence.

Availability

As far as availability goes, Google’s Gemini Nano model will only be available on Pixel 9 devices, with an official release expected in the coming weeks. While Gemini itself is available across all devices, the ability to use Gemini as a personal smartphone assistant is limited to the latest Pixel 9 range. Apple Intelligence also awaits a formal launch, and will be available on last year’s iPhone 15 Pro series as well as this year’s new iPhones. Unfortunately, EU users will not be able to use Apple Intelligence features on the iPhone, with Apple citing the EU’s strict DMA (Digital Markets Act) legislation.

Neither Gemini Nano nor Apple Intelligence has an official launch date yet, but chances are both will debut in the coming weeks or months. Apple Intelligence will be free for all users (you can even use the ChatGPT features for free without creating an account), and Gemini Nano’s core features will be free for Pixel users too. Pixel users also get a year of access to Gemini Advanced, which can handle more complex tasks, has a larger context window, and unlocks Gemini’s Live Mode.


The post Google Gemini Nano vs Apple Intelligence: Which AI Assistant is Better? first appeared on Yanko Design.
