As if we haven’t had our fill of buzz-worthy terms like “eXtended Reality” or the “Metaverse,” Apple came out with a new product that pushed yet another old concept into the spotlight. Although the theory behind spatial computing has been around for almost two decades now, it’s one of those technologies that needed a more solid implementation from a well-known brand to actually hit mainstream consciousness. While VR and AR have the likes of Meta pushing the technologies forward, Apple is banking more heavily on mixed reality, particularly spatial computing, as the next wave of computing. We’ve already explained what Spatial Computing is and even took a stab at comparing the Meta Quest Pro with the new kid on the block, the Apple Vision Pro. And while it does seem that Spatial Computing has a lot of potential in finally moving the needle forward in terms of personal computing, there are still some not-so-minor details that need to be ironed out first before Apple can claim complete victory.

Designer: Apple

Spatial Computing is the Future

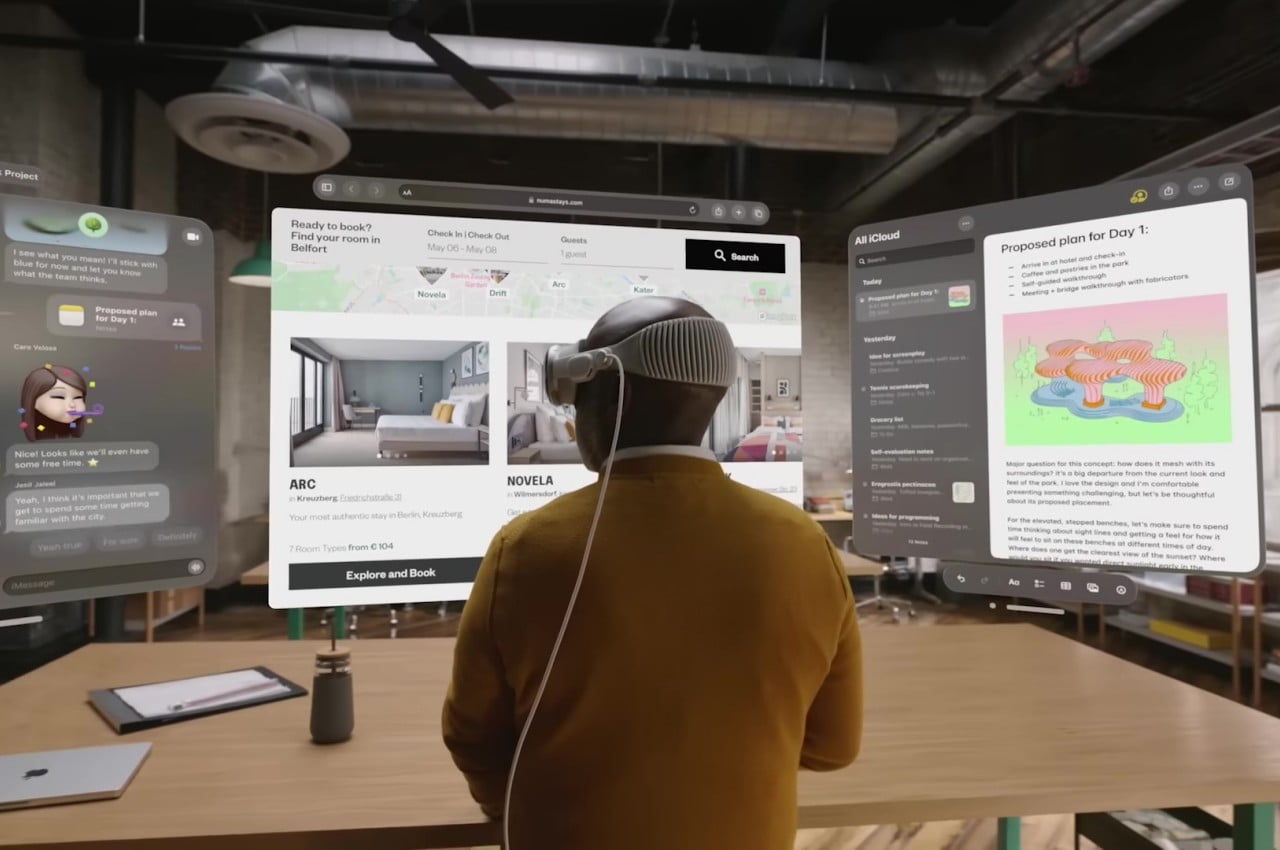

As a special application of mixed reality, Spatial Computing blurs the boundaries between the physical world and the applications that we use for work and play. But rather than just having virtual windows floating in mid-air the way VR and AR experiences do it, Apple’s special blend of spatial computing lets the real world directly affect the way these programs behave. It definitely sounds futuristic enough, but it’s a future that is more than just fantasy and is actually well-grounded in reality. Here are five reasons Spatial Computing, especially Apple’s visionOS, is set to become the next big thing in computing.

Best of Both Realities

Spatial computing combines the best of VR and AR into a seamless experience that will make you feel as if the world is truly your computer. It doesn’t have the limitations of VR and lets you still see the world around you instead of shutting it out completely. At the same time, it still allows you to experience a more encapsulated view of the virtual world by effectively dimming and darkening everything except your active application. It’s almost like having self-tinting glasses, except it only affects specific areas rather than your whole view.

More importantly, spatial computing doesn’t just hang around your vision the way AR stickers would. Ambient lighting affects the accents on windows, while physical objects can change the way audio sounds to your ears. Virtual objects cast shadows as if they’re physically there, even though you’re the only one that can see them. Given this interaction between physical and virtual realms, it’s possible to have more nuanced controls and devices in the future that will further blur the boundaries and make using these spatial apps feel more natural.

Clear Focus

The term “metaverse” has been thrown around a lot in the past years, in no small part thanks to the former Facebook company’s marketing, but few people can actually give a solid definition of the term, at least one that most people will be able to understand. To some extent, this metaverse is the highest point of virtual reality technologies, a digital world where physical objects can have some influence and effect. Unfortunately, the metaverse is also too wild and too amorphous, and everyone has their own idea or interpretation of what it can or should be.

In contrast, spatial computing has a narrower and more focused scope, one that adds a literal third dimension to computing. Apple’s implementation, in particular, is more interested in taking personal computing to the next level by freeing digital experiences from the confines of flat screens. Unlike the metaverse, which almost feels like the Wild West of eXtended reality (XR) these days, spatial computing is content with doing one thing: turning the world into your desktop.

Relatable Uses

As a consequence of its clearer focus, spatial computing has more well-defined use cases for these futuristic-sounding features. Apple’s demo may have some remembering scenes from the Minority Report film, but the applications used are more mundane and more familiar. There are no mysterious and expensive NFTs, or fantastic walks around Mars, though the latter is definitely possible. Instead, you’re greeted by familiar software and experiences from macOS and iOS, along with the photos, files, and data that you hold dear every day.

It’s easy enough to take this kind of familiarity for granted, but it’s a factor that sells better over a longer period of time. When the novelty of VR and the metaverse wears off, people are left wondering what place these technologies will have in their lives. Sure, there will always be room for games and virtual experiences that would be impossible in the physical world, but we don’t live in those virtual worlds most of the time. Spatial computing, on the other hand, will almost always have a use for you, whether it’s entertainment or productivity, because it brings the all-familiar personal computing to the three-dimensional physical world.

Situational Awareness

One of the biggest problems with virtual reality headsets is that they can’t really be used except in enclosed or safe spaces, often in private or at least with a group of trusted people. Even with newer “passthrough” technologies, the default mode of devices like the Meta Quest Pro is to put you inside a 360-degree virtual world. On the one hand, that allows for digital experiences that would be impossible to integrate into the real world without looking like mere AR stickers. On the other hand, it also means you’re shutting out other people and even the whole world once you put on the headset.

The Apple Vision Pro has a few tricks that ironically make it more social even without the company mentioning a single social network during its presentation. You can see your environment, which means you’ll be able to see not only people but even the keyboard and mouse that you need to type an email or a novel. More importantly, however, other people will also be able to see your “eyes” or at least a digital twin of them. Some might consider it gimmicky, but it shows how much care Apple gives to those subtle nuances that make human communication feel more natural.

Simpler Interactions

The holy grail of VR and AR is to be able to manipulate digital artifacts with nothing but your hands. Unfortunately, current implementations have been stuck in the world of game controllers, using variants of joysticks to move things in the virtual world. They’re just a step away from using keyboards and mice, which creates a jarring disconnect between the virtual objects that almost look real in front of our eyes and the artificial way we interact with them.

Apple’s spatial computing device simply uses hand gestures and eye tracking to do the same, practically taking the place of a touchscreen and a pointer. Although we don’t actually swipe to pan or pinch to zoom real-world objects, some of these gestures have become almost second nature thanks to the popularity of smartphones and tablets. It might take a bit of getting used to, but we are more familiar with the direct movements of our hands than with memorizing buttons and triggers on a controller. It simplifies the vocabulary considerably, which places less burden on our minds and helps reduce anxiety when using something shiny and new.

Spatial Computing is Too Much into the Future

Apple definitely turned heads during its Vision Pro presentation and has caused many people to check their bank accounts and reconsider their planned expenses for the years ahead. As expected of the iPhone maker, it presented its spatial computing platform as the best thing since the invention of the wheel. But while it may indeed finally usher in the next age of personal computing, it might still be just the beginning of a very long journey. As they say, the devil is in the details, and these five details could see spatial computing and the Apple Vision Pro take a back seat for at least a few more years.

Missing Haptics

We have five (physical) senses, but most of our technologies are centered around visual experiences primarily, with audio coming only second. The sense of touch is often taken for granted as if we were disembodied eyes and ears that use telekinesis to control these devices. Futuristic designs that rely on “air gestures” almost make that same assumption, disregarding the human need to touch and feel, even if just a physical controller. Even touch screens, which have very low tactile feedback, are something physical that our fingers can touch, providing that necessary connection that our brains need between what we see and what we’re trying to control.

Our human brains could probably evolve to make the need for haptic feedback less important, but that’s not going to happen in time to make the Apple Vision Pro a household item. It took years for us to even get used to the absence of physical keys on our phones, so it might take even longer for us to stop looking for that physical connection with our computing devices.

Limited Tools

The Apple Vision Pro makes use of simpler hand gestures to control apps and windows, but one can also use typical keyboards and mice with no problem at all. Beyond these, however, this kind of spatial computing takes a step back when it comes to the different tools that are already available and in wide use on desktop computers and laptops. Tools that take personal computing beyond the typical office work of preparing slides, typing documents, or even editing photos. Tools that empower creators who design both physical products and the digital experiences that will fill this spatial computing world.

A stylus, for example, is a common tool for artists and designers, but unless you’re used to non-display drawing tablets, a spatial computing device will only get in the way of your work. While having a 3D model that floats in front of you might be easier to look at compared to a flat monitor, your fingers will be less accurate in manipulating points and edges compared to specialized tools. Rather than deal breakers, these are admittedly things that can be improved over time. But at the launch of the Apple Vision Pro, spatial computing applications might be a bit limited to those more common use cases, which makes it feel like a luxurious experiment.

Physical Strain

Just as our minds are not used to it, our bodies are even more alien to the idea of wearing headsets for long periods of time. Apple has made the Vision Pro as light and as comfortable as it can, but unless it’s the size and weight of slightly large eyeglasses, it’ll never really be that comfortable. Companies have been trying to design such eyewear with little success, and we can’t really expect them to make a sudden leap in just a year’s time.

Other parts of our bodies might also feel the strain over time. Our hands might get sore from all the hand-waving, and our eyes could feel even more tired with the high-resolution display so close to our retinas. These health problems might not be so different from what we have today with monitors and keyboards, but the ease of use of something like the Vision Pro could encourage longer periods of exposure and unhealthy lifestyles.

Accessibility

As great as spatial computing might sound for most of us, it is clearly made for the majority of able-bodied and clear-seeing people. Over the years, personal computing has become more inclusive, with features that enable people with different disabilities to still have an acceptable experience, despite some limitations. Although spatial computing devices like the Vision Pro do make it easier to use other input devices such as accessibility controllers, the very design of headsets makes them less accessible by nature.

Affordability

The biggest drawback of the first commercial spatial computing implementation is that very few people will be able to afford it. The prohibitive price of the Apple Vision Pro marks it as a luxury item, and its high-quality design definitely helps cement that image even further. This is nothing new for Apple, of course, but it does severely limit how spatial computing will grow. Compared to more affordable platforms like the Meta Quest, it might be seen as something that benefits only the elite, despite the technology having even more implications for the masses. That, in turn, is going to make people question whether the Vision Pro would be such a wise investment, or whether they should just wait it out until prices become more approachable.

The post 5 Ways Spatial Computing Will Succeed and 5 Ways It Will Flop first appeared on Yanko Design.
